EuroIA 2014 Keynote

The following was delivered as the closing plenary address at the European Information Architecture Summit in Brussels, Belgium on September 27, 2014.

—

I was asked here today to reflect on the past ten years of our practice in the field of information architecture.

It is my opinion however that to only go back that far in our reflection would be doing this fine community a true disservice. It should also not surprise anyone in this room that if you ask an information architect to reflect on 10 years, she might not be able to stop there.

So instead of ten years, I want to reflect on a much broader timescale. I want to talk with you about the ways in which information has been architected since we first found the need to start talking about information back in the sixteenth century.

Don’t be fooled by my historical framing. I am not a qualified historian, and I assure you that our journey isn’t intended to inform you of information’s history.

My intent, instead, is to ask one not-so-simple question:

Why aren’t we good at this yet?

1500

In the sixteenth century, the printed word inspired humankind to make systems to share our knowledge more widely than ever thought possible before then. During this pivotal century, we saw the emergence of the basic structures that would allow us to share lists of resources, learn languages, gain a sense of place, and agree on what day it was.

It was at this point in history that information as a topic of conversation started to emerge. At this time, information was a bit more true to its name. It was any form that informed. The only criteria for being information was that it inform. If it did not inform, it was not information. It was nonsense.

It was here, at the true dawn of the information age that a group of brave-hearted lexicographers started to emerge in pursuit of standardization of language. Without these beautiful people willing to wrangle a seemingly un-wrangleable mess, we would not have modern language and many would argue, we wouldn’t have modern anything else. These brave souls had to wrangle a crazy stream of nonsense that was running out of the mouths of their users before that was even thought possible.

Well guess what? Lexicographers are still trying to catch up with that crazy stream of nonsense running out of our collective mouth. Our languages are still emerging, they are still in flux.

Linguistic insecurity is a reality we all face at one time or another. This is the all too common fear that our language does not match the one of our context. It’s that not-so-pleasant feeling that we all get when someone talks over our head.

As a field of practice focused on upholding the integrity of meaning, we must pay close attention to an emerging trend.

This trend is being seen on projects as much as in thought-leadership. The trend is the avoidance of clear definitions of language used in a specific context; the easy-button of making up a new word instead of searching for one that might exist. Our lack of attention to the ontologies we are weaving within our work is killing our momentum and widening the gap between those who understand what we do and those who do not.

I go to a lot of conferences these days and get to talk with a lot of people. Many who approach me have one thing in common: a sense of relief that they aren’t crazy for feeling like there should be a concept like information architecture. They just didn’t know what to call it yet.

People are searching for the words to type into the Google. Hiring managers want to put the right words on their job req to get the right talent. Yet, we complain about being reduced, about being mis-represented or in some cases about being marginalized.

The linguistic insecurity that we feel around the term information architecture is our fault. It is a mess that we made.

And like the brave-hearted lexicographers of the sixteenth century, we are going to have to start to control the stream of crazy that is currently running out of our collective mouth if we are going to make information architecture important to those outside of rooms like this.

1600

By the seventeenth century, the information world was starting to really take form. We saw the advent of news media. Isogonic lines finally allowed us to pinpoint actual places on maps. We recognized the need for systems like higher education and postage.

We also experienced the dawn of machines, and with them, the dawn of technical manuals. Yes, even Galileo had to write these beauties.

Until this time, we didn’t see statistical graphs emerge. Just try to imagine a world where we had illuminated manuscripts, beautiful realistic paintings of human life, an emerging global economy, and marvelous architectural structures but your eye had never before seen a chart or graph. When I think about what this must have been like, my mind boggles. Charts and graphs, and visual comparison in general have been essential to our way of life for centuries.

Charts and graphs are not just for the data scientists. They are meant to put data into the hands of the non-data savvy. Charts and graphs are arrangements by which we receive information we could never see from a list or a paragraph.

Most importantly perhaps, the advent of statistical graphs taught us the power of information. In the mid 1950s Darrell Huff warned readers that data left to its own devices, even when statistically arranged, can wreak havoc on a reader’s understanding.

Fast forward to where we are today and to say that things have improved would be to take the position of a spin doctor in this house of worship.

We have more tools than ever before, sure. And no one would argue that we may have more data than we know what to do with. But to think that we have fewer misunderstandings, less mistrust of data, less statistical discomfort in the world today when compared to the world of the seventeenth century, that would be a true stretch of the imagination.

It seems that in the same breath that we learned how to wield data for good, we also realized that it can be used for evil. And I won’t sit here and tell you all to train your children in the art and science of statistics, instead I will warn you about the willingness that future generations will have to listen to what we tell them. I give to you my favorite statistic to share with them…

87.8976% of all statistics are total lies.

1700

At the dawn of the eighteenth century we were finally blessed with the fruits of all that statistical labor and data gathering. Information was starting to work for us. We were finally able to use data we had prepared to predict what might be coming our way. Literally, Halley’s comet. In 1705, British astronomer Edmond Halley demonstrated that the comets of 1456, 1531, 1607, and 1682 were all one and the same comet. He also predicted that it would return in 1758, but he died before the world would know that he was right.

Another interesting development during this time was the emergence of the first documented editorial board as well as the establishment of taxonomic standards for librarianship, biology and measurement. The world was starting to believe that everything could be categorized, classified and organized.

It was through the introduction of these shared taxonomic standards that we started to understand the true complexity involved in dissecting our world. The paths that were carved with this early work still haunt us to this very day. Because taxonomies are inherently filled with judgement. And judgement is always subjective. You can make whatever rules you want, but if someone doesn’t agree with your classification of things, that’s their prerogative.

The freedom to think of the world as we wish is one of the greatest gifts that we have as human beings. But it is also the greatest plight that we face as information architects.

We must constantly struggle to question our own biases. We must remember to leave our knowledge at the door in favor of seeing through someone else’s lens, however murky. We must make sense of messes that others wouldn’t have a fighting chance against.

Something as seemingly simple as organizing a grocery store can become incredibly difficult when the disagreements of taxonomy that normally can be ignored or conflated in a physical medium are suddenly staring us in the face in the digital world. The advent of the web and an implementation of taxonomy and labeling on it go hand in hand. There has never been a web without these things. There has barely been a world without these things. Yet here we are 2300 years after Aristotle sent his students out to classify everything in existence, and we are still not able to decide if fruits and vegetables are useful labels for our modern dietary and shopping habits.

This is a screenshot from a service called Fresh Direct. They are known as a leader in their field.

But how could leaders of a field like grocery service not know that Avocados, Beans, Peas, Corn, Cucumbers, Eggplants, Mushrooms, Peppers, Squash, Zucchini and Tomatoes are not vegetables? I mean that’s just insane, right?

Well, no, not so much.

The more important question for Fresh Direct is this:

How many American consumers of delivery groceries think about these items as vegetables?

An understanding of their users’ mental model regarding buying produce is of utmost importance to a company as successful as Fresh Direct. With the exception of avocado, they don’t even mess around with cross-listing these anomalies as both fruits and vegetables. Because they additionally understand the idea that if everything is a fruit, then nothing is.

Do they care that a botanist trained in Linnaean taxonomy would not be able to find a tomato on their website? No. Would that botanist really struggle to apply a new taxonomy to the context of a grocery website? Probably not.

We are actually much more flexible in our use of taxonomies than our diagrammatic techniques let on.

Even those of us that wield taxonomies for a living. And we struggle still to use taxonomies effectively and efficiently to make sense of our world. Because we are still discovering things about this world we live in, and until that stops the endless iteration on our classifications of reality will continue.

1800

Marked by the opening of the Library of Congress in 1800, the nineteenth century would shape much of the world we live in today. Innovations in image processing, operational research, and the birth of computer programming as we know it all showed signs of an information-laden future. The mid nineteenth century was the first time you could be in one location and hear the current weather in another.

Train transport and railway expansion was a whole new industry. With those trains came train schedules, the posting of train routes and the introduction of an entire service industry rising up around commercial transport. The world was starting to get smaller. Information was starting to travel faster. With trains we could turn weeks into days, even hours.

Reuters began about this time, claiming to beat the railroad by 6 hrs with a fleet of 45 carrier pigeons. The first distance learning program was started. Around the same time the first business school revealed itself as needed.

Subways, stock tickers, and the telephone created a need for real-time information displays for the first time ever. Switch boards, phone books and data tables had to be created for all this new information that had to be distributed. New jobs were being introduced to serve the needs of these new industries of service and information. Conductors, programmers and operators were trained on brand new technology promising to forever change our way of living.

In the late nineteenth century we saw our comfort with this newfound way of life bring forth a whole new set of channels for our expression of information.

Microphones and the phonograph forever changed what we think of when we think of a piece of music. Before their invention, people considered a composition to be a piece. Now we consider a specific performance to be a piece. With one composition leading to many pieces.

The mimeograph was invented around the same time, which allowed us to do for print, what the microphone allowed us to do for sound. We could replicate our thoughts faster and more efficiently. We could give them away more freely. We could react to the world in a more real-time way. Moving pictures would forever alter our sense of time and place, and allow us to slow down and speed up our world at our whim. Radio and the telegraph would exponentially widen the range in which real-time information could travel. Animation put the bug into our ears that we could create moving, breathing worlds plucked right out of our imagination.

By the close of the nineteenth century, you could get the weather in another town and find the right train to get there. You could project things from your imagination into new broadcast channels that would spread them near and far without you needing to be there to help it along. You could even mail a question to a service called Mundaneum and a well-researched result would be mailed back for a small fee.

Many of the inventions of the nineteenth century are still cornerstones of our life today. And at this point many of the forms that inform us have been turned to digital. But have we gotten any better at explaining train transport? In some cases, maybe. But the truth is, most of the train schedules you run into in 2014 are still a confusing pile of data to sift through.

Even a service as slick as Google is full of unsightly seams that can create anxiety on the part of a traveler in a foreign country just trying to get to Paris from Brussels. Sorry Google, I had to manage without you this time.

Since the dawn of information we have accumulated and discarded so much stuff, but perhaps there is no more forgotten pile of awesome than that of the things that were invented during this amazing time period.

Public transport as a concept is not going away, and yet we still suck at it. Phones are more important now than ever before but what we mean when we say phone has changed forever.

There are still a million downloadable apps vying to be the newest, most accurate, most insightful transit app. There are a million weather apps and websites to tell you what the weather is like right now anywhere you can name. But how many of them are good? How many of them are thoughtful? How many of them are truly forms that inform? And how many of them are just nonsense? How many of them are just ads with some data sprinkled on top?

Our nineteenth century brothers and sisters would have little trouble making it around in our world today. And I don’t think that’s such a good thing considering how much we seem to think the world has dramatically changed since then.

1900

The twentieth century is where we really start to see attention paid to information as a workable medium. And practices started to emerge to work through the complexities of this medium.

Information graphics was a subject of growing interest and usage in the worlds of business, marketing and news media. Many of the diagrammatic techniques we still use today in those fields were taught to readers widely for the first time in an instructional book first published in 1914 by Willard C. Brinton.

In “Graphic Methods for Presenting Facts”, Brinton spends equal time on the construction of accurate diagrams as he does on the rhetorical power of choosing how to construct and present diagrams.

It is also about this time that Science Fiction starts to predict a world much different than the one of the nineteenth century.

In 1909 English writer E. M. Forster wrote The Machine Stops. It described a world where people live underground, with a Machine running their lives entirely. In this tale, the author anticipates instant messaging, videoconferencing and television. The punchline of this dark dystopian story is that the only book that the main character owns is an enormous technical manual about “the Machine.”

In 1935, a pivotal science fiction short story revealed a bit more about the future of working with information as a medium. In Pygmalion’s Spectacles, Stanley Weinbaum writes:

Of course nobody knows anything. You just get what information you can through the windows of your five senses, and then make your guesses. When they’re wrong, you pay the penalty.

In this excerpt, we are the you he is referring to. The makers of form. The ones who want the right message to travel.

At this point, not even halfway through the twentieth century, we were starting to understand the true complexity of organizing massive amounts of content for distribution across messy human networks with hopes that the right subjective information would pop into the heads of everyone that our message meets. As a result we were starting to dream of a future where our problems would be solved by algorithms, robots, and computers.

In 1949, the term virtual reality emerged to describe these other places we would be spending more and more time going to. About the same time, the term information overload was also coined, to describe an emerging sense of unrest that all this change and access to this sketchy material called information was bringing with it.

By the late 1950s we were swimming in a sea of technical jargon. We had coined terms like software, GUIs, trackball, supercomputers, bugs, hackers, and video games. Language in 1959 was getting eerily close to the world that science fiction had predicted. Life was suddenly on fast forward. Information and channels were accumulating everywhere and anywhere there was whitespace.

In the nineteen sixties the world was taught complex concepts like hypertext, hypermedia, and multimedia. Email was first experimented with in select academic and scientific communities. The words “information” and “architecture” were first starting to be put together in the technology industry at this time. With IBM’s research team holding the first sighting of a definition for this emerging retronym, information architecture, in 1964.

The world seemed to be preparing itself for something big and by the nineteen seventies we had the first online communities springing up around everything from academics to gaming. People in select numbers were starting to spend time in virtual places. Places made of information.

By the early nineteen seventies, students using ARPANET had already started to move us out of the digital-only use of the internet. They were playing with the idea of eCommerce. Ok well seriously, it is pretty well documented that they just needed a good way to buy weed cross-country.

The emergence of this type of situation was a strong sign of the times to come where life, work and play would all collide into an ever-smaller box on your desk, and eventually a pane of glass in your pocket.

We had started to create virtual extensions to our physical world. Places where we could be alternate versions of ourselves. We created services that catered to this new virtual/physical existence. We began to ask questions of place differently than ever before.

In 1976, about the same time that weed was creating the first need for eCommerce, Richard Saul Wurman was giving an important address at the American Institute of Architects conference. In it he brings the attention of his audience to an emerging need in the architecture of the twentieth century. The need he described was an architecture of information.

In 1989, Mr. Wurman started to warn us that:

…what is information to one person is data to another.

In his amazingly influential book “Information Anxiety” he describes an emerging black hole between data and knowledge. He asks that the world start to prepare itself for an impending tsunami of information making its way to our shores. And without naming it and saying it is so, he leaves us with the core lessons that would become the foundation of a field of practice known as Information Architecture.

It had felt like technology was a swelling elephant in every room for quite some time, but the web was the final straw that would break the backs of many business models. We had made the world wide web a world wide phenomenon. And it came with a staggering learning curve that many would spend the next decade or more dealing with.

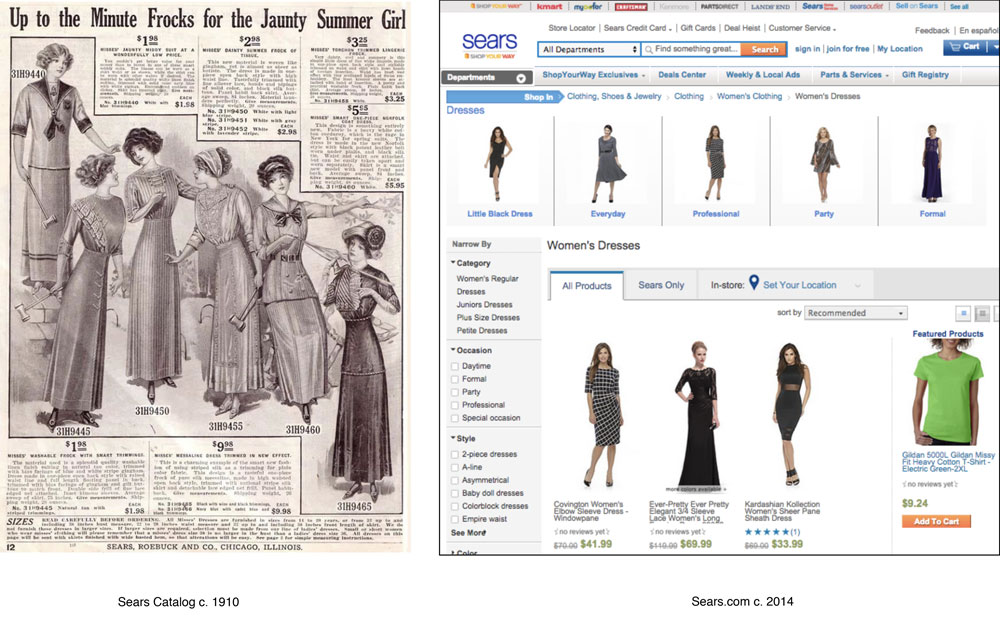

Sears, Roebuck & Co. was an American phenomenon. They were an institution.

At first it seemed simple. In 1997 the team at Sears decided it made sense to put digital pictures of their catalog pages onto the internet. Shortly thereafter they agreed that uploading the weekly circulars for distribution there would probably make sense. They didn’t stop paying to print the catalog, and they didn’t change the way they ran the stores. It was a relatively small change.

Fast forward to 2014, and you don’t need to read American business news or work on the Sears.com team to know that something isn’t right here. Look at the mess they have made of their website. Dan Klyn and I once had a client with a taxonomic ailment similar to Sears.com. He described it in our heuristic evaluation as “asking for wall to wall carpet, and getting carpet up the walls.”

Unfortunately, the team at Sears.com seems to be pursuing the same strategy as their catalog team was back in 1910. To an outsider that strategy seems to be “Prove we have the most stuff for sale.”

In the nineties, we had started to realize the power of this new kind of connectivity and started to consider how it might impact our lives if we were to let information in even more fully. Not just to find a train schedule or the weather, but what about for finding a mate or somewhere to live or a new job? What about doing all those errands I have to put pants on and go out to do now?

Could this magical world wide web finally be the pants-free-errand-running-heaven we had dreamed of since the dawn of all this modern mess?

Users, as we think of them now, were born in this moment. And we had a growing share of the power. The world was small enough that suddenly, you didn’t have to be big to make a difference. We were all able to be publishers if we wanted to be. We didn’t even need permission or our own computer. All we needed was an internet cafe.

We had made places for the web to live in within our physical spaces. We had brought it into our life enough to add it to our list of places to go. We had started to expect things to make sense across all the various channels in our complex lives. It would still be years until we could take our internet with us on the go, but we were at this point increasingly starting to talk about it when we weren’t there.

We started to wonder what it would be like to actually live inside these virtual realities. We started to talk about going “online” — “taking things offline” — and using words like “visit” and “chat” in whole new ways. Visiting started to mean looking at something online. Visit us online became common vernacular in conversation and signage across numerous contexts. Chatting was no longer always verbal. We could chat with our fingers, as well as with our mouths. We were starting to create digital versions of ourselves for use in games, chatrooms and online dating sites. We suddenly had places to go without ever leaving our homes. Those places needed real architectures. As users, we had no idea that the architecture of the early web was holding us back.

By the end of the nineties, an emerging community of designers, thinkers and technologists were really starting to push the web as more than just a communication and publishing platform. They were designing a web that was truly transactional. A web that was meant to be omnipresent. A web that would become the ultimate city that never sleeps.

It was about this time that the mainstream news media started to wonder aloud about where the lines should be drawn between on and offline life. Parents were worried for their children. People across dozens of industries began to worry about their own futures. We worried that perhaps this was all headed towards the dystopian version of the future we had invented in our art and films. We were just starting to consider the ramifications of existing in a world truly made of information. A world made of something so inherently subjective, a material so full of flaws. A medium that had such a tough time being molded and manipulated.

And we were terrified by the wave of what we suddenly saw coming our way. But we had long since opened Pandora’s box-o-digital; tipped the first domino of Moore’s law.

By the close of the twentieth century, the average person sent and received 733 pieces of paper mail per year. Half of which was typified as junk. There were 248 million users already on the internet, and while a mere 4% of the world’s population was browsing the web, many more were losing sleep, time and money to Y2K preparation. All because of the information anxiety that missing just 2 digits would wreak on an entire world economy.

In 1999 a small but important change happened. We started to recognize domain names as a form of physical property. You could actually own a virtual place made entirely of zeros and ones. It seems like this was about the time that we had finally started to realize that this web thing wasn’t going anywhere.

It was not only here to stay, but it was here to challenge everything we knew about commerce, social interactions and content publishing and retrieval.

I graduated from high school in the spring of the year 2000. I attended my first college design classes that Fall at Northeastern University in Boston. I lived in the same dorm building where Napster had been invented the year before.

I was introduced to the concepts of Multimedia, Typography and Information Architecture all in the same week. At this point, as a 17-year-old who had come of age during the birth of the consumer web, I knew all about hyperlinks and used words like software and hardware with native familiarity. I knew how to use fonts and make digital images. Heck, I even knew PageMaker and CorelDraw (yes I said it) like the back of my hand.

But I didn’t know how to think about these tools that I had so easily found and learned to use. I didn’t know how to be thoughtful yet.

I didn’t yet know how to make a form that would inform.

Until I heard important (and Googleable) terms, like Information Architecture, I didn’t possess the keys I needed to unlock a whole new world that was not findable before. I didn’t yet have the power to invoke and weaponize my skills to create understanding.

And so my career as an information architect began, with more tools than I knew what to do with and a list of questions so lengthy that I was pretty sure it would annoy everyone around me.

But that’s the thing about being inexperienced: we are far too likely to assume that someone has already figured everything out for us. So there was 17-year-old me, picking up bits and pieces here and there and applying them to whatever work I was doing in whatever medium.

In 2004, I graduated with a dual major in graphic design and multimedia. At the time, I don’t even think my professors knew what would become of such a strange hybrid. But I found my way to my first job as an IA.

One of the first days I can remember as a professional IA was spent printing out and reading articles from the web.

I remember thinking:

What a fun job I have!

See I was doing all this printing and reading on an actual work assignment. Something new and amazing had happened in web technology and my manager had asked me to look into its implications on the IA work that we were doing.

It seemed that some really smart folks had apparently made it possible to change a page of a website without having to re-render the entire page. It was the next hot thing. The thing that promised to be all the rage. At the time it was called AJAX. I later learned this was a buzz term coined to describe asynchronous JavaScript calls, which had existed for quite a bit longer than the buzz.

This new thing was being rumored as the dawn of a new web.

A Web 2.0.

I still remember the first time I read that idea. It was like a cold shiver went up my spine. Ten years later, it is a laughable artifact and an overused buzz word from a long time ago.

Now at this point in my existence as an IA, every arrow on a site map was there to mean a page load. Seldom did I have a deep conversation about an arrow. It was the boxes that IAs focused on. The arrows belonged to the developers.

But with the words of prominent bloggers of the time in my head, I started to grok that the arrows were now much more important, and perhaps much less important at the same time.

As I was having that realization in an office park in New Hampshire, some of you were gathering in a room in this very city, to kick off the conference I now stand before. The words on this tag cloud are very possibly the same as a cloud that could have been created from the conference content from that very year.

It was only a decade in the past, that we had to once again stop and think about the fact that this technology we had created was starting to turn into something none of us could understand or make much sense of anymore with the tools and methods that we had. When we started to question the arrows on our maps ten years ago, IA’s foundation had started to wobble.

People started to wonder if the practice of information architecture would be needed in a world driven by animated load sequences, experiential interfaces, searchable databases, client-side data and transclusion instead of a world driven by hierarchical classifications, images plus text equals content, and server side data calls.

The same people who were once in charge of designing rigid navigational hierarchies were now invited to play in other sandboxes. They started to talk about motion and interaction’s impact on design. Suddenly all the other designers started to show up, interested in this new and supposedly now more flexible medium. Because until this point, many graphic designers wouldn’t touch the web. It was too messy. Too restrictive.

We were all just getting back on track, when technology threw us another whammy. Just three years after we could no longer rely on the arrows, our boxes were suddenly in question. Social and the mobile web were suddenly on a crash course for one another. Our boxes were suddenly social objects that needed to be resilient enough to be passed around from one device to another; shared on one network and then another.

We are now ten years after that first mention of Web 2.0 and many of the organizations that I work with still seem to be getting over Web 1.0.

They are still existing in a world where rigid taxonomies, servers, page loads and technology contracts run circles around the brave souls hoping to make sense of the waves of information crashing upon their shores.

I still have to guide them through designing hierarchies. We still have to talk about controlled vocabularies and what language to use for the labels and signposts to make sense. We still have to consider the tasks we want people to accomplish against their willingness to do so.

To be totally honest, we still have to talk about most of the same things we would have had to talk about back in 2004. But now we also have these numerous new things to fit into that same discussion. We have to now consider how they will handle mobile devices, how they will integrate with social networks, and all the other technologies that they have since invested in to manage their inventory, customer relationships and workflows. We now have to talk about how all that motion and interaction in virtual places affect performance and perception in physical places.

Just like in 2004, I still spend most days relieving information anxiety with pictures.

I still use more words to clear up intent than I do to describe solutions. And I still have this sick feeling inside like I have no idea what the heck I am doing.

But more importantly, I still believe we can collectively get better at this whole information architecture thing. All I can hope for is that my sharing this history today reminded you of the stake that we all have in the future.

I hope you walk away with an understanding that information is a responsibility that we all share. Forty percent of the world’s population, otherwise known as 2,937 million people, are now going online. If current predictions are correct, we will each be sending and receiving on average 125 emails PER DAY (that’s more than 45,000 PER YEAR) by 2015. American adults are reporting an average of 40 hours per week in places made of information.

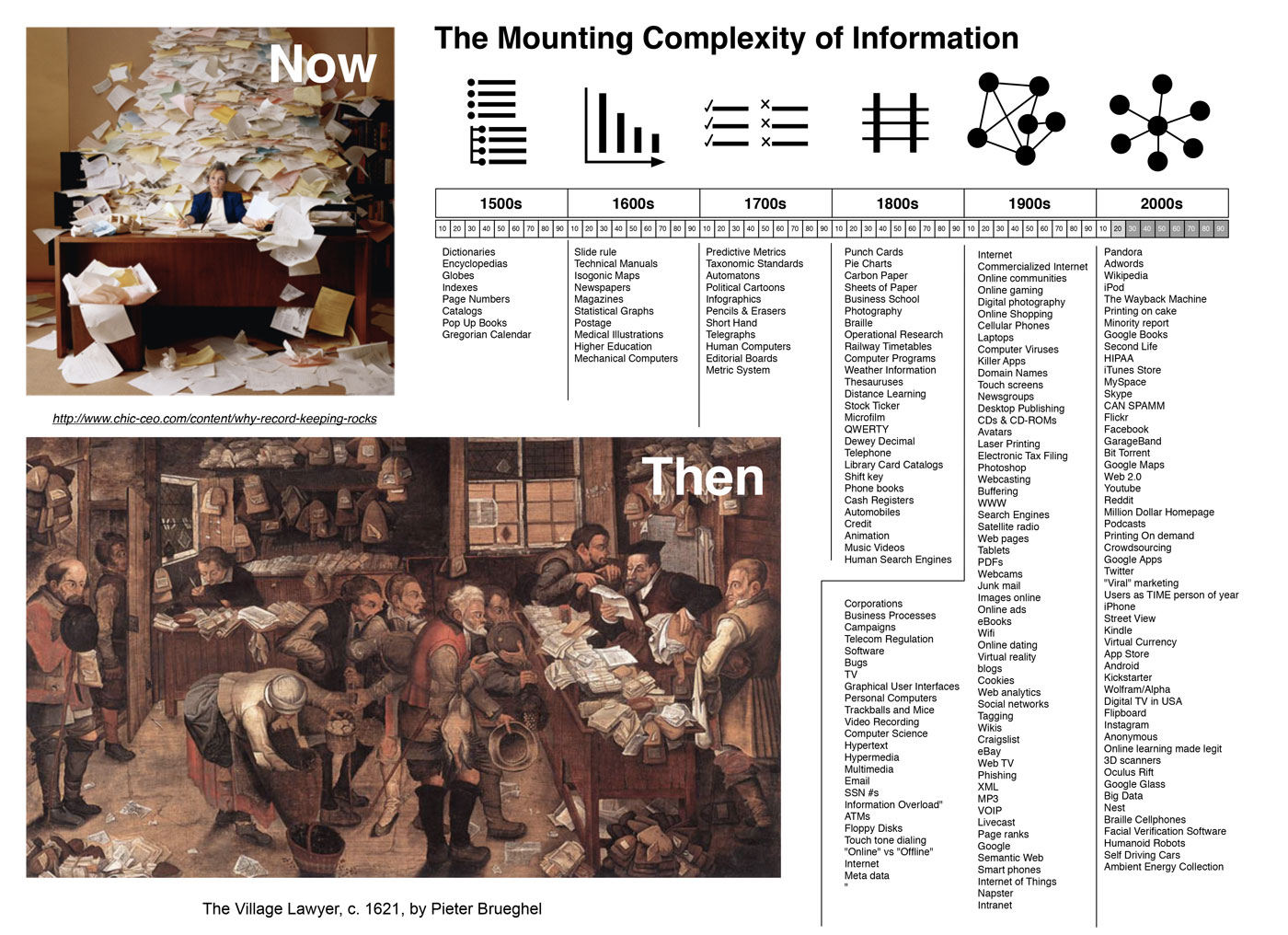

And this list of things we have architected the information of since the sixteenth century, this list is still ours to make sense of. Because there is not a single thing on this list that doesn’t apply to life in 2014. Not one.

The truth is that we have accumulated a lot and discarded very little over the last six centuries of architecting information.

And sure we might now have all the data we could ever want, but how much of it has been given form that informs?

If you think that the time for IA as a job is in the past or if you are above making sense of any of these things from our collective past, then you are losing a major opportunity to define the world we will live in next.

- We are still trying to win the battle against linguistic insecurity that has been waged since the sixteenth century.

- We still struggle with the basics of separating the data from the forms that inform, like our seventeenth century brothers and sisters.

- We aren’t much better at classifying and labeling things than we were in the eighteenth century.

- We are still stuck in the same quagmires as early nineteenth century designers were around how to communicate rules, routes and roles effectively.

- We are still trying to architect the bridges between all the channels we created in the twentieth century.

- We still suck at convincing businesses to run virtual places as carefully as they run their physical places.

If history has taught you anything on this journey today, I hope it is this: We will keep expanding our existence past the boundaries we have in place today, both physical and virtual. We will continue to find new ways to project our ideas and imagination into the world. And we will continue to categorize everything that we encounter in order to make things more clear.

Most importantly:

We will not be the final generation of those driven to make sense of the world around us.

So before we move on from what we made here and leave a huge mess behind, we must impart what we have learned to those that will inherit our staggering list of things:

- We must teach the next generation that information is not a thing, it is not content, and it is not data. It is subjective truth. And what is information to one person can be data to another.

- We must teach them that good is something that they need to define for themselves, because patterns alone are shackles on innovation.

- We must teach them to consider the impact of their language choices on the things that they make. Because words matter and they tend to stick with us.

- We must teach them that structure is one of the most powerful tools of rhetoric that they have, regardless of the medium in which they work.

I started writing this talk with a question: Why aren’t we good at this yet? But I ended writing it with the hope that this is always the question we will ask. Because if we are doing this right, we will never be done and we will never stop trying.

So in ten years, I hope that some now 22-year-old will be presenting their thoughts on the progress of our industry to a room of their peers in a ballroom like this one. When that moment comes, I hope to be there cheering them on. I also hope that the message is still:

We’ve only just begun.

Thank you.